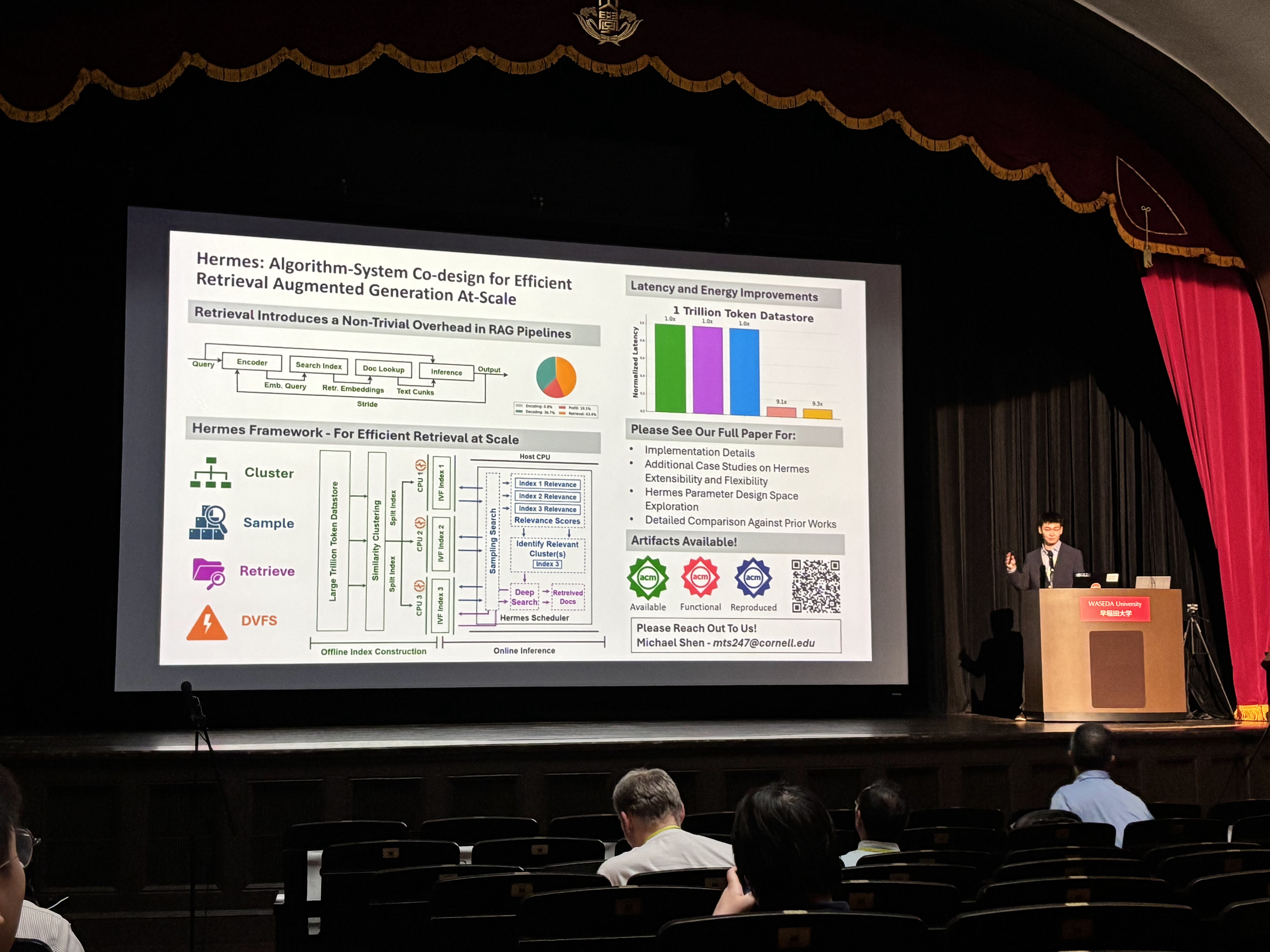

Hermes: Algorithm-System Co-design for Efficient RAG at Scale

June 2024 - June 2025

- Problem: The retrieval step in RAG introduces substantial overhead for at-scale datastores.

- Solution: Hermes co-designs algorithms and systems for scalable retrieval.

- Impact: Achieves up to 9.33× latency and 2.10× energy improvements on large-scale RAG.

Abstract

The rapid advancement of Large Language Models (LLMs), together with the ever-expanding volume of data, makes keeping the latest models up to date a challenge. The high computational cost of repeatedly retraining models to handle evolving data has led to the development of Retrieval-Augmented Generation (RAG). RAG presents a promising solution that enables LLMs to access and incorporate real-time information from external datastores, thus minimizing the need for retraining to update the information available to an LLM. However, as the RAG datastores used to augment information expand into the range of trillions of tokens, retrieval overheads become significant, impacting latency, throughput, and energy efficiency. To address this, we propose Hermes, an algorithm-system co-design framework that addresses the unique bottlenecks of large-scale RAG systems. Hermes mitigates retrieval latency by partitioning and distributing datastores across multiple nodes, while also enhancing throughput and energy efficiency through an intelligent hierarchical search that dynamically directs queries to optimized subsets of the datastore. On open-source RAG datastores and models, we demonstrate that Hermes improves end-to-end latency and energy by up to 9.33× and 2.10×, respectively, without sacrificing retrieval quality for at-scale, trillion-token retrieval datastores.
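The idea of a hierarchical search over a partitioned datastore can be illustrated with a small sketch. This is not the Hermes implementation: the function names, the centroid-based routing, and the toy 2-D vectors are all assumptions made for illustration. The query is first compared against one summary vector per partition, and only the `probe` best-matching partitions are searched in full.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def normalize(v):
    norm = math.sqrt(dot(v, v))
    return [x / norm for x in v]

def centroid(vectors):
    # Mean direction of a partition (hypothetical routing summary).
    dim = len(vectors[0])
    mean = [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]
    return normalize(mean)

def exhaustive_search(query, partitions, top_k):
    # Baseline: score every document in every given partition.
    q = normalize(query)
    scored = [(dot(q, normalize(vec)), doc_id)
              for part in partitions for doc_id, vec in part]
    scored.sort(reverse=True)
    return [doc_id for _, doc_id in scored[:top_k]]

def hierarchical_search(query, partitions, probe, top_k):
    # Level 1: rank partitions by similarity between query and centroid.
    q = normalize(query)
    centroids = [centroid([normalize(vec) for _, vec in part])
                 for part in partitions]
    ranked = sorted(range(len(partitions)),
                    key=lambda i: dot(q, centroids[i]), reverse=True)
    # Level 2: search only the `probe` most promising partitions.
    selected = [partitions[i] for i in ranked[:probe]]
    return exhaustive_search(query, selected, top_k)

# Toy datastore: three partitions, each a list of (doc_id, embedding).
partitions = [
    [("a0", [1.0, 0.0]), ("a1", [0.9, 0.1])],
    [("b0", [0.0, 1.0]), ("b1", [0.1, 0.9])],
    [("c0", [-1.0, 0.0]), ("c1", [-0.9, -0.1])],
]
query = [1.0, 0.05]
fast = hierarchical_search(query, partitions, probe=1, top_k=2)
full = exhaustive_search(query, partitions, top_k=2)
print(fast, full)
```

In this toy setup, probing a single partition scores two documents instead of six. In a distributed deployment each partition would live on a different node, so fewer probed partitions would also mean fewer nodes involved per query.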

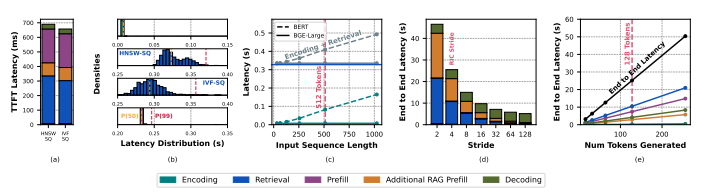

Towards Understanding Systems Trade-offs in Retrieval-Augmented Generation Model Inference

August 2023 - June 2024

- Focus: Analyzing the performance trade-offs of RAG for LLMs.

- Findings: RAG can double inference latency and consume terabytes of storage.

- Contribution: Presents taxonomy and characterization of RAG systems for LLMs.

Abstract

The rapid increase in the number of parameters in large language models (LLMs) has significantly increased the cost involved in fine-tuning and retraining LLMs, a necessity for keeping models up to date and improving accuracy. Retrieval-Augmented Generation (RAG) offers a promising approach to improving the capabilities and accuracy of LLMs without the necessity of retraining. Although RAG eliminates the need for continuous retraining to update model data, it incurs a trade-off in the form of slower model inference times. As a result, the use of RAG in enhancing the accuracy and capabilities of LLMs often involves diverse performance implications and trade-offs based on its design. In an effort to begin tackling and mitigating the performance penalties associated with RAG from a systems perspective, this paper introduces a detailed taxonomy and characterization of the different elements within the RAG ecosystem for LLMs that explore trade-offs across latency, throughput, and memory. Our study reveals underlying inefficiencies in RAG for systems deployment that can result in time-to-first-token (TTFT) latencies that are twice as long and unoptimized datastores that consume terabytes of storage.

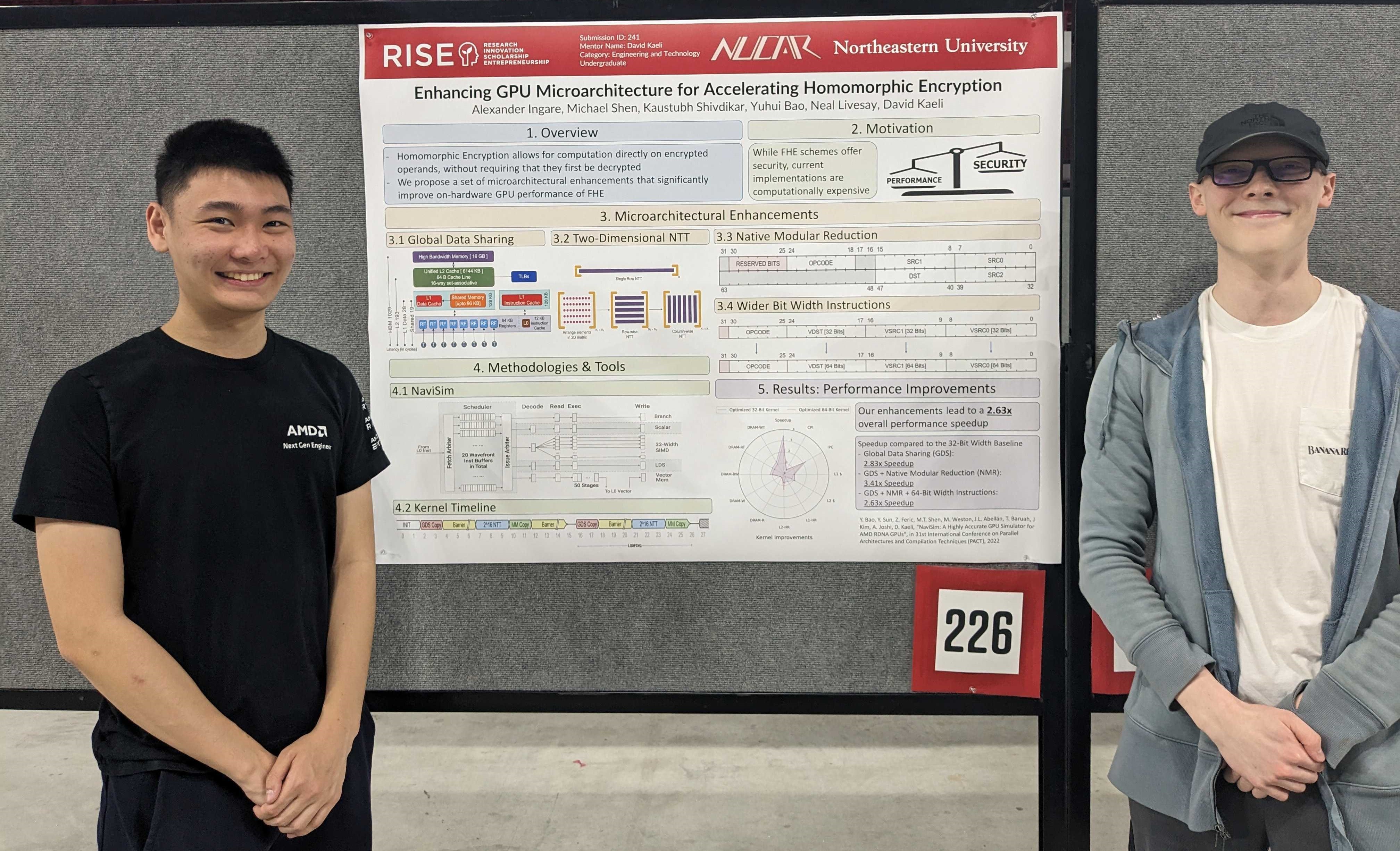

GME: GPU-based Microarchitectural Extensions to Accelerate Homomorphic Encryption

November 2022 - April 2023

- Challenge: FHE enables computation on encrypted data but is computationally expensive.

- Approach: Simulated architectural changes to improve FHE performance on AMD GPUs.

Abstract

Fully Homomorphic Encryption (FHE) enables the processing of encrypted data without decrypting it. FHE has garnered significant attention over the past decade as it supports secure outsourcing of data processing to remote cloud services. Despite its promise of strong data privacy and security guarantees, FHE introduces a slowdown of up to five orders of magnitude compared to the same computation using plaintext data. This overhead is presently a major barrier to the commercial adoption of FHE. In this work, we leverage GPUs to accelerate FHE, capitalizing on a well-established GPU ecosystem available in the cloud. We propose GME, which combines three key microarchitectural extensions along with a compile-time optimization to the current AMD CDNA GPU architecture. First, GME integrates a lightweight on-chip compute unit (CU)-side hierarchical interconnect to retain ciphertext in cache across FHE kernels, thus eliminating redundant memory transactions. Second, to tackle compute bottlenecks, GME introduces special MOD-units that provide native custom hardware support for modular reduction operations, one of the most commonly executed sets of operations in FHE. Third, by integrating the MOD-unit with our novel pipelined 64-bit integer arithmetic cores (WMAC-units), GME further accelerates FHE workloads by 19%. Finally, we propose a Locality-Aware Block Scheduler (LABS) that exploits the temporal locality available in FHE primitive blocks. Incorporating these microarchitectural features and compiler optimizations, we create a synergistic approach achieving average speedups of 796×, 14.2×, and 2.3× over Intel Xeon CPU, NVIDIA V100 GPU, and Xilinx FPGA implementations, respectively.

Top Pick in Hardware and Embedded Security
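To give a sense of why modular reduction is a natural target for dedicated hardware, the sketch below shows Barrett reduction, one common software technique for computing `x mod q` without a division in the hot path. The abstract does not state which reduction algorithm the MOD-units implement, so the choice of Barrett reduction, the function names, and the example modulus are assumptions for illustration only.

```python
def barrett_setup(q):
    # Precompute the shift width k and mu = floor(4^k / q) once per modulus.
    k = q.bit_length()
    mu = (1 << (2 * k)) // q
    return k, mu

def barrett_reduce(x, q, k, mu):
    # Valid for 0 <= x < q*q, e.g. the product of two reduced values.
    t = (x * mu) >> (2 * k)   # estimate of floor(x / q), never too large
    r = x - t * q             # remainder candidate, slightly too big at worst
    while r >= q:             # small correction step
        r -= q
    return r

def mod_mul(a, b, q, k, mu):
    # Modular multiplication built from one multiply and one reduction.
    return barrett_reduce(a * b, q, k, mu)

q = 65537                     # example prime modulus (assumption)
k, mu = barrett_setup(q)
print(mod_mul(12345, 54321, q, k, mu))
```

Each reduction costs two multiplications, a shift, and a subtraction with a short correction, and FHE kernels perform it after nearly every multiply, which is why fusing it into the arithmetic pipeline can pay off.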

MOTION: MAV Operated Tunnel Inspection using Neural Networks

June 2022 - December 2022

- System: Developed drone-based tunnel inspection using R-CNN and SLAM.

- Innovation: Integrated sensor suite for crack detection in GPS-denied environments.

Abstract

Recent infrastructure collapses, such as the MBTA’s Government Center collapse, have highlighted the importance of safe and efficient methods for evaluating critical infrastructure. To address this issue, we have developed a small Unmanned Aerial System that can detect and evaluate the risks associated with hazardous fractures within tunnel walls. Our proposed solution leverages Region-based Convolutional Neural Networks (R-CNN) to apply computer-vision masks to cracks, Simultaneous Localization And Mapping (SLAM) for global navigation in GPS-denied tunnels, and an integrated sensor suite for visualizing and interpreting crack integrity while maintaining flight capabilities in remote environments. With these techniques, we can deploy a tool that provides expert civil engineers with insightful analysis for a variety of civil infrastructure evaluations. With the developed system, we hope to provide the industrial and academic communities with a prototype that can help mitigate the impact of cracks in vulnerable infrastructure.

1st Place ECE Capstone Project

NaviSim: A Highly Accurate GPU Simulator for AMD RDNA

June 2020 - April 2022

- Contribution: Developed NaviSim, the first cycle-level simulator for AMD RDNA GPUs.

- Result: Achieved kernel execution time accuracy within 9.92% of real hardware.